Concerns are mounting over the rise of deepfake scams in the wake of the June hack of U.S. file transfer software firm Progress Corp.

In June 2023, a Russian-speaking hacking collective known as Cl0p breached the U.S. file transfer software provider Progress Corp., stealing sensitive data from hundreds of organizations, including British Airways, Shell, and PwC.

Although initially expected to extort the victimized organizations through digital blackmail, the Cl0p hackers have since turned to using the stolen information to generate advanced ‘deepfakes’ of corporate executives.

The deceptive replicas are being used to send fraudulent emails disguised as authentic communication from legitimate sources (usually the CEO’s office), instructing recipients to wire funds to a fake account. Contrary to expectations, the hackers did not attempt to extort the affected organizations by threatening to release the data unless a ransom was paid.

Haywood Talcove, chief executive of LexisNexis Risk Solutions’ Government division, claims the stolen personal data – including photos, driving licenses, dates of birth, and health and pension information – could be used with software to create fraudulent video selfies commonly used by U.S. state agencies to verify identities. Talcove further believes that one fake identity could defraud the government of up to $2 million.

“I am not a criminal, but I’ve been studying this for a long time — if I had this much information, and it was so pristine, the sky is the limit”, said Talcove.

Miami-based KYC verification platform Sumsub, which recently released a deepfake detection tool, reported a surge in deepfake fraud cases “outstripping all of 2023,” with notable upticks in the UK, EU, Canada and US.

Responding to a LinkedIn post by Professor David Maimon, Proactive Fraud Intelligence at Georgia State University, Sumsub said:

Verifying not only documents but also users is becoming more complex, especially because of the growing risk of fake docs and deepfakes, and we know this field is snowballing.

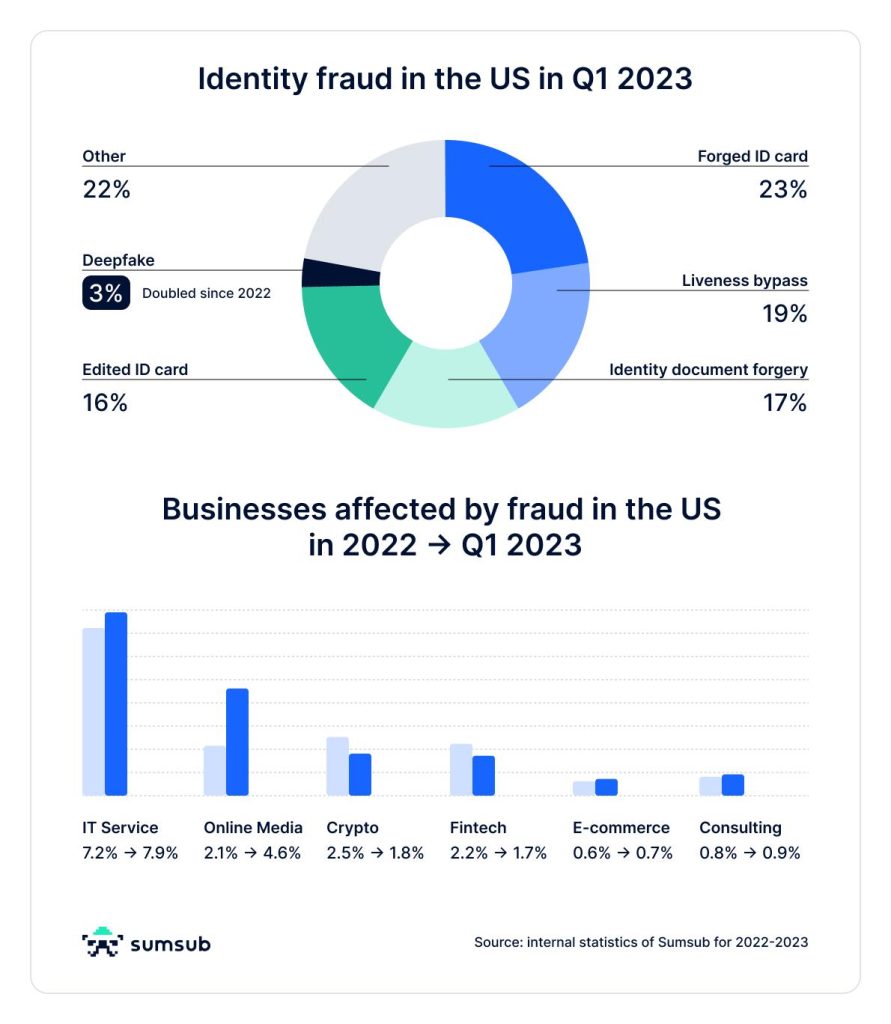

Our statistics show that last quarter, the top three fraud types in the UK and EU were identity document forgery, liveness bypass, and edited ID cards. Same in the U.S. and Canada.

You can look closer at the stats in the picture below and our reports on U.S.&Canada 👉 https://sumsub.link/zvq

and UK&EU 👉 https://sumsub.link/ppr

So this topic is a vast concern for companies and individual users, showing that simple #kyc checks are not enough anymore. Companies must perform checks throughout the user journey to stay protected from fraud.

For us, as a verification platform, it’s a sign to improve our verification tools constantly. 🤲

Illustration: Identity fraud in the US in Q1 2023 (sumsub)

Source: sumsub.com

Jumio, meanwhile, warned that consumers are overconfident in their ability to spot deepfakes. To combat this new form of fraud, Sun Xueling, a Minister in the Singapore government, suggested introducing scam detection registries, better fraud surveillance, and cross-border data sharing.

To protect individuals and organizations, numerous biometrics-related firms are rolling out solutions to detect deepfake fraud. VisionLabs launched a deepfake detection product with an accuracy rate of 92-100 percent, ElevenLabs unveiled an audio-sample uploading tool, and Daon, Paravision, and ID R&D have all released tools to tackle the threat.

While voice and gesture-based payments, such as those outlined in Finance Magnates, have the potential to enhance customer experience, proper measures such as user education, multi-factor authentication, and AI-powered defense mechanisms should be implemented to guard against deepfake fraud.

Isn’t it Ironic?

The irony of artificial intelligence (AI) being employed to combat deepfakes is a reminder that, as with any tool, it can be used for good or ill. Right now, AI is being used to try to thwart this new form of deception.

However, as the sophistication of deepfake technology continues to rise, the threat of fraud, misinformation, financial losses and public manipulation is greater than ever. AI-driven security measures and public education about deepfakes are essential to staying ahead of the curve and preventing costly losses. Deepfakes pose a real danger that must be addressed with urgency.